You have just published a new article on your blog or a new page on your website, and you have made all the optimizations needed to improve your organic search ranking.

But here is the catch: all that effort will go unseen if Google does not add your content to its index!

How do you know whether your new site or content will take an hour or a week to appear in the search results? How can you reduce the time between publishing a new URL and its indexing?

Indexing of content by Google can take anywhere from a few days to a few weeks. Fortunately, there are simple steps you can take to make indexing more efficient. We will see how to speed up the process below.

1. Request indexing from Google

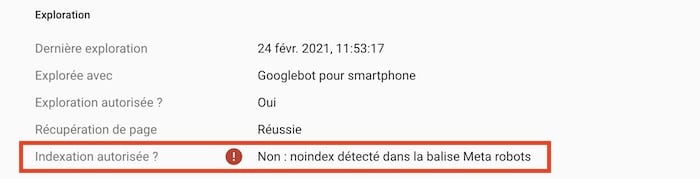

The easiest way to get your site indexed is to request it through Google Search Console.

To do this, go to the URL inspection tool and paste the URL you want indexed into the search bar. Wait until Google checks the URL: if it is not indexed, click the “Request indexing” button.

As mentioned in the introduction, if your site is new, it will not be indexed overnight. In addition, if your site is not properly configured to let Googlebot crawl it, it may not be indexed at all. Let's continue!

2. Optimize the robots.txt file

The robots.txt file is a file that Googlebot reads as a set of instructions about what it should or should not crawl. Crawlers from other search engines, such as Bing and Yahoo, also recognize robots.txt files.

You can use robots.txt files to help crawlers prioritize your most important pages, so that their requests do not overload your site.

Also check that the pages you want indexed are not marked as non-indexable, which is the next point on this checklist. A well-configured robots.txt file prepares the ground for the indexing of your site.

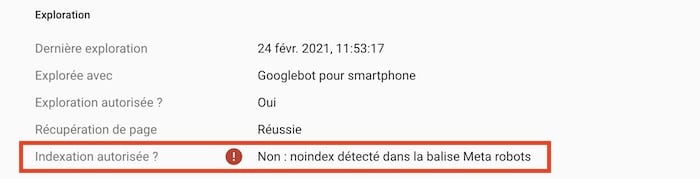
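As a rough way to verify the rules described above, the sketch below uses Python's standard `urllib.robotparser` module to test whether a given URL may be crawled by Googlebot. The robots.txt content and the `example.com` URLs are hypothetical and only serve as an illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def can_googlebot_fetch(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt rules let Googlebot crawl the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


if __name__ == "__main__":
    for url in (
        "https://www.example.com/blog/new-article",
        "https://www.example.com/admin/settings",
    ):
        print(url, can_googlebot_fetch(ROBOTS_TXT, url))
```

To check a live site instead, `RobotFileParser.set_url()` followed by `read()` downloads the real file before calling `can_fetch()`.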

3. Noindex tags

If some of your pages are not indexed, it may be because they carry “noindex” tags. These tags tell search engines not to index the pages.

Check for the presence of these two types of tags.

Meta tags

Some meta tags can affect how your web pages are indexed. This is particularly the case for tags that carry the noindex directive.

You can find out which pages of your website have noindex tags by looking for “noindex page” warnings. If a page is marked noindex, delete the tag and submit the URL to Google so that it gets indexed.
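If you would rather check a page's HTML yourself, a minimal sketch along these lines can spot a noindex directive in a robots meta tag. It relies only on Python's standard `html.parser` module. The class and function names are illustrative, and the script assumes you already have the page's HTML as a string.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for item in attr.get("content", "").split(","):
                item = item.strip().lower()
                if item:
                    self.directives.add(item)


def has_meta_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


if __name__ == "__main__":
    sample = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_meta_noindex(sample))
```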

X-Robots-Tag headers

Using Google Search Console, you can see which pages return an “X-Robots-Tag” in their HTTP response header.

Use the URL inspection tool: after entering a page, look for the answer to the “Indexing allowed?” question.

If you see the words “No: 'noindex' detected in 'X-Robots-Tag' http header”, you know there is something you need to remove.
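Outside Search Console, the same check can be approximated by reading the response headers directly. The sketch below is one possible approach using Python's standard library. The function names are hypothetical, and it treats `none` as blocking because that directive is equivalent to `noindex, nofollow`.

```python
from urllib.request import Request, urlopen


def header_blocks_indexing(headers) -> bool:
    """Return True if any X-Robots-Tag value carries a noindex (or none) directive.

    `headers` is an iterable of (name, value) pairs.
    """
    for name, value in headers:
        if name.lower() != "x-robots-tag":
            continue
        for token in value.lower().split(","):
            # A directive may be scoped to one crawler, e.g. "googlebot: noindex".
            directive = token.split(":")[-1].strip()
            if directive in ("noindex", "none"):
                return True
    return False


def fetch_headers(url: str):
    """Send a HEAD request and return the response headers as (name, value) pairs."""
    request = Request(url, method="HEAD")
    with urlopen(request, timeout=10) as response:
        return list(response.headers.items())


if __name__ == "__main__":
    print(header_blocks_indexing([("X-Robots-Tag", "noindex, nofollow")]))
```

For a live page, pass the result of `fetch_headers("https://www.example.com/page")` (a placeholder URL) to `header_blocks_indexing()`.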

4. Use a sitemap

Another widespread technique to speed up Google's indexing of your content is to use a sitemap.

It is simply a file that gives Google information about the pages, images, or videos on your website. It also lets you tell the search engine how your pages relate to one another, as well as which recent updates to take into account.

A sitemap can be particularly useful for Google indexing in the following cases:

- Your site hosts a lot of different pages and content: a sitemap makes sure that Google does not miss any of it.

- You have not linked all your pages together: a sitemap lets you show Google the relationships between your different URLs, and helps you find possible orphan pages.

- Your site is still too recent to have backlinks (incoming external links): a sitemap lets you alert Google to the existence of your pages.

You can submit your sitemap through the “Sitemaps” tool in Search Console and reference it in your robots.txt file.
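To give an idea of what such a file looks like, here is a minimal sketch that builds a sitemap with Python's standard `xml.etree.ElementTree` module. The `build_sitemap` helper and the `example.com` URLs are assumptions made for the example. Only the `loc` and `lastmod` elements of the sitemaps.org format are used.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build a sitemap from (url, last_modified) pairs; dates use YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages, for illustration only.
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/blog/new-article", "2024-03-02"),
    ]))
```

Once the file is online, a line such as `Sitemap: https://www.example.com/sitemap.xml` in robots.txt points crawlers to it.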

5. Monitor canonical tags

Canonical tags tell crawlers whether a particular version of a page is preferred.

If a page does not have a canonical tag, Googlebot considers it the preferred page and the only version of that page, so it will index it.

But if a page has a canonical tag pointing to a different URL, Googlebot assumes that another version of the page exists and will not index the page it is on, even if that other version does not exist!

Use Google's URL inspection tool to check for canonical tags.
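For a quick local check, a sketch like the following reads the `<link rel="canonical">` tag from a page's HTML and reports whether it is missing, points to the page itself, or points elsewhere. The names are illustrative, and the comparison simply ignores a trailing slash, which is a simplification.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag found."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = {name: (value or "") for name, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href") or None


def canonical_status(page_url: str, html: str) -> str:
    """Return 'missing', 'self', or 'points elsewhere: <url>' for the given page."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return "missing"
    if parser.canonical.rstrip("/") == page_url.rstrip("/"):
        return "self"
    return "points elsewhere: " + parser.canonical


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="https://www.example.com/old-page"></head>'
    print(canonical_status("https://www.example.com/new-page", html))
```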

6. Work on internal linking

Internal links help crawlers find your web pages. Your sitemap lists all of the content on your website, which lets you identify the pages that are not linked.

- Unlinked pages, called “orphan pages”, are rarely indexed.

- Remove nofollow from internal links. When Googlebot encounters a nofollow attribute, it treats it as a signal not to follow the link, so the target page may be left out of the index.

- Add internal links from your best pages. Crawlers discover new content by exploring your website, and internal links speed up this process. Streamline indexing by using your high-ranking pages to link to your new pages.
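The idea of comparing your sitemap with your internal links can be sketched in a few lines. The example below assumes you already have the list of sitemap URLs and a mapping of each page to the internal URLs it links to (for instance from a crawl). Both inputs and the `find_orphans` helper are hypothetical.

```python
def find_orphans(sitemap_urls, internal_links, home_url):
    """Return sitemap URLs that no other page links to.

    `internal_links` maps each page URL to the internal URLs it links to.
    The home page is excluded, since it is the usual entry point.
    """
    linked = set()
    for source, targets in internal_links.items():
        # Ignore self-links: a page linking to itself is still an orphan.
        linked.update(target for target in targets if target != source)
    return sorted(set(sitemap_urls) - linked - {home_url})


if __name__ == "__main__":
    # Hypothetical site structure, for illustration only.
    sitemap = ["/", "/blog", "/blog/new-article", "/landing/old-offer"]
    links = {
        "/": ["/blog"],
        "/blog": ["/", "/blog/new-article"],
        "/landing/old-offer": ["/landing/old-offer"],
    }
    print(find_orphans(sitemap, links, home_url="/"))
```

Any URL the function returns is a candidate for a new internal link from one of your stronger pages.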

7. Get backlinks

The web works a bit like a recommendation system. If other sites share your URLs, it tells Google that your pages are worth indexing.

Google recognizes the importance of a page and trusts it when it is recommended by authoritative sites. Backlinks from these sites can encourage Google to index a page.

If you have trouble getting your pages indexed, ask other site publishers for backlinks.

8. Share on social networks

Google also monitors social networks, including Twitter, where it seems to pick up new links very quickly.

Sharing your new content on these social networks can quickly bring Googlebot to your page. The sooner it is crawled, the sooner it will be indexed.

9. Display a publication date

For blog articles or frequently updated pages, making sure the publication or last-modified date is clearly visible and up to date can help Google identify new content.

This does not guarantee instant indexing, but it can signal to Google that there is new content to crawl and potentially index.
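One possible way to make these dates both visible and machine-readable is the HTML `<time>` element with a `datetime` attribute. The sketch below assumes a hypothetical `render_dates` helper in a Python-based templating step. The wording of the output is only an example.

```python
from datetime import date


def render_dates(published: date, modified: date) -> str:
    """Render visible publication and update dates using <time> elements."""
    html = 'Published on <time datetime="{0}">{0}</time>'.format(published.isoformat())
    # Only show an update date when the page actually changed after publication.
    if modified > published:
        html += ', updated on <time datetime="{0}">{0}</time>'.format(modified.isoformat())
    return "<p>" + html + "</p>"


if __name__ == "__main__":
    print(render_dates(date(2024, 1, 15), date(2024, 3, 2)))
```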

10. Ensure the quality and relevance of the content

Although this is a fundamental aspect of SEO, it is worth mentioning here because the quality and relevance of your content directly influence Google's willingness to index and rank your pages.

High-quality, original, informative content that matches users' search intent is more likely to be considered valuable by Googlebot and to be indexed quickly.

Google favors content that provides real added value and is regularly updated. Indexing is not only a technical matter: it also depends on the intrinsic value of the content.

Need an SEO consultant to help you get your site indexed faster and improve your position in search engines? Post a project for free on Codeur.com and receive your first ten quotes within the hour!